

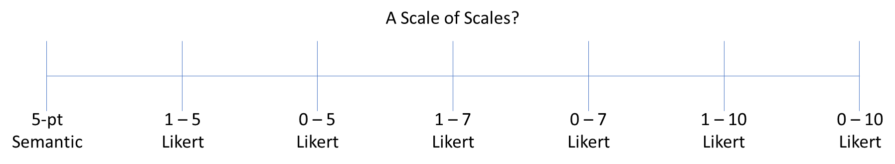

Stop Debating the Survey Question Scale: Why a 0-10 Scale is Your Best Option

Posted on January 10, 2017

I’m frequently asked about scales for rating questions on surveys… “Should we use a 5-point scale, a 7-point scale, a 10-point scale, or an 11-pt scale?” “Should we use semantic anchors at every option, or just numeric, Likert scales?” Etc. Of course, there is no one-size-fits-all answer, and writing the survey questions properly is generally a much more important exercise. That said, my default is to use a 0-10 scale for rating attributes on customer feedback questionnaires. Here are the general reasons why:

- Anchors: For web-based surveys, people generally don’t read the text anchors because they go through the survey quickly, and the science shows us that people know that a rating of 0 is the bad end on a 0-10 scale. Using 1-10 is ambiguous… is a 1 the best rating, or is a 10 the best? And for phone-based surveys, you should not only measure the time it takes the interviewer to read all the different options in full, but also allow for the respondent asking the interviewer to repeat the scale labels when they can’t recall all the options. Time is money, not just for you, but also for your customers.

- Data diversity: Using 1-5 scales doesn’t yield sufficient diversity in the data to enable key driver analysis. On a 5-pt scale, scores tend to cluster around 3 and 4 (and there’s a huge difference in most people’s minds between a 3 and a 4 on a 5-pt scale), so it’s difficult to discern the true drivers. In the customer’s mind, there’s a difference between a rating of 6 and a rating of 7 (for example) that you can’t capture on a 5-pt scale. So, on an 11-pt scale (i.e. 0-10), you’ll get a much broader spread of results, yielding better predictive analysis.

- Converting textual scales: When doing quantitative analysis, the ratings provided by customers must always be converted to a number. If you’re asking the customer to rate something, you should just ask them for the number; otherwise you must convert their response into a number for them, and that may not be the number the customer would have selected. For example, you might convert a customer’s selection of “Very Bad” to a numeric rating of 1. But that doesn’t necessarily mean that the customer would have selected a 1… why convert it for them instead of just asking the real question the first time? Your customers are all sophisticated enough to know how numeric scales work (which wasn’t necessarily the case decades ago at the origin of this work).

- Consistency: The research and science behind Net Promoter found a 0-10 scale to be the optimal method for that question. Therefore, if you’re using NPS, you’re using 0-10 for the Recommend (NPS) question anyway. Changing the scale in the middle of the survey adds no value for you or the customer, and just costs the customer more time to read and interpret. Your goal should be a ~3-minute questionnaire, so with that little “real estate” you really don’t want to spend the time on scales; you want to spend it on getting feedback.

- Mid-point: Using an odd-numbered scale (0-10, for a total of 11 options) provides a mid-point at 5, i.e. 5 options above the “5” and 5 options below it, making it easier (and quicker) for customers to rate.

- Top box method: Consider this example where 10 people respond to a question on an 11-pt scale: one person scores a 1, one person scores a 2, one person scores a 3, and so on up to 10. The average score for these 10 people is 5.5. What does that tell us? Nothing, which is why analysts often provide the standard deviation and other statistics with their results, and those are lost on most people (who has time for all that?). So we know averages obfuscate (I’ve written about this on the blog). Instead, B2B researchers are standardizing on a “top box” scoring method, showing what percentage of respondents selected the Top “N” box (e.g. what percent of people selected a 9 or a 10 on the 11-pt scale). This method provides meaning on its own, without a lesson in statistics: in the example, we have a Top 2 Box score of 20%, telling us that 20% of the respondents scored a 9 or 10… meaning we need to improve the experience for the 8 people whose expectations were missed. Now, related to the data diversity point above, if you only had a 5-pt scale, where would you set the bar… is a rating of 4 a “desired” level of performance? Most would say they want to know how many 5’s they get, forcing a measure of Top 1 Box, which is a very high bar. But on an 11-pt scale you can very well set the bar at a 9 and measure “Top 2 Box”, where a rating of 9 is generally considered a more-than-acceptable level of performance.

- And finally, although the scale is important, I don’t think the debates around rating scales are worth the time. Don’t we have better things to do, like driving improvement?
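
To make the top box arithmetic above concrete, here is a minimal Python sketch using the same ten hypothetical ratings (1 through 10). The function name `top_box_share` and the `threshold` parameter are my own illustrative choices, not part of any survey tool.

```python
from statistics import mean


def top_box_share(ratings, threshold=9):
    """Return the fraction of ratings at or above `threshold`.

    With threshold=9 on a 0-10 scale this is a "Top 2 Box" score
    (ratings of 9 or 10). The threshold is an assumption you set yourself.
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    return sum(1 for r in ratings if r >= threshold) / len(ratings)


# Ten respondents, one at each score from 1 to 10 (the example in the text).
ratings = list(range(1, 11))

average = mean(ratings)                     # 5.5, which says little on its own
top2 = top_box_share(ratings, threshold=9)  # 0.2, i.e. 2 of 10 respondents

print(f"Average: {average}")
print(f"Top 2 Box: {top2:.0%}")
```

The average lands in the middle of the scale no matter how the ratings are spread, while the Top 2 Box share says directly how many respondents cleared the bar you chose.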

What do you think:

- Have you uncovered other reasons to use something other than a 0 – 10 scale that I’ve not addressed?

- Is this worth all the debate, or are you better off accepting norms and getting on with the real work?